Introduction

Generative AI has seen a surge in interest over the past few years, with applications ranging from content creation to complex reasoning tasks. Responding to that demand, AWS made its managed service, Amazon Bedrock, available in Europe this week. The service offers streamlined access to foundation models from AI startups as well as from Amazon itself.

What’s the Buzz About?

Amazon Bedrock is distinguished by its serverless approach: users can get started with foundation models quickly and customize them with their own data. Even better? Integration with other AWS services allows developers to embed these models directly into their applications. The best part is that users needn’t fuss about the underlying infrastructure. Amazon Bedrock handles that for you!

Getting Started with Amazon Bedrock

Currently, the service is available in selected AWS regions, notably us-east-1 (N. Virginia) and eu-central-1 (Frankfurt). Note that model availability varies by region. For instance, while us-east-1 offers every supported model, eu-central-1 currently limits users to models from Amazon and Anthropic.
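To check which models a given region actually offers, you can ask the Bedrock control-plane API. The snippet below is a minimal sketch, assuming boto3 is installed and AWS credentials are configured; `models_by_provider` and `list_models` are helper names made up for this post.

```python
from collections import defaultdict


def models_by_provider(response):
    """Group model IDs from a list_foundation_models response by provider name."""
    grouped = defaultdict(list)
    for summary in response.get("modelSummaries", []):
        grouped[summary["providerName"]].append(summary["modelId"])
    return dict(grouped)


def list_models(region_name):
    """Query Bedrock in one region (requires AWS credentials)."""
    import boto3  # imported lazily so the helper above works without boto3

    client = boto3.client("bedrock", region_name=region_name)
    return models_by_provider(client.list_foundation_models())


# Example usage (makes real AWS calls):
# for region in ("us-east-1", "eu-central-1"):
#     print(region, list_models(region))
```

Running the commented-out loop against both regions should make the difference in provider coverage visible at a glance.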

To dive in:

Request Access: You begin by requesting access to your chosen foundation model. Once AWS forwards your request to the model owner (like Anthropic or Stability AI), you’ll be on standby until the green light is given.

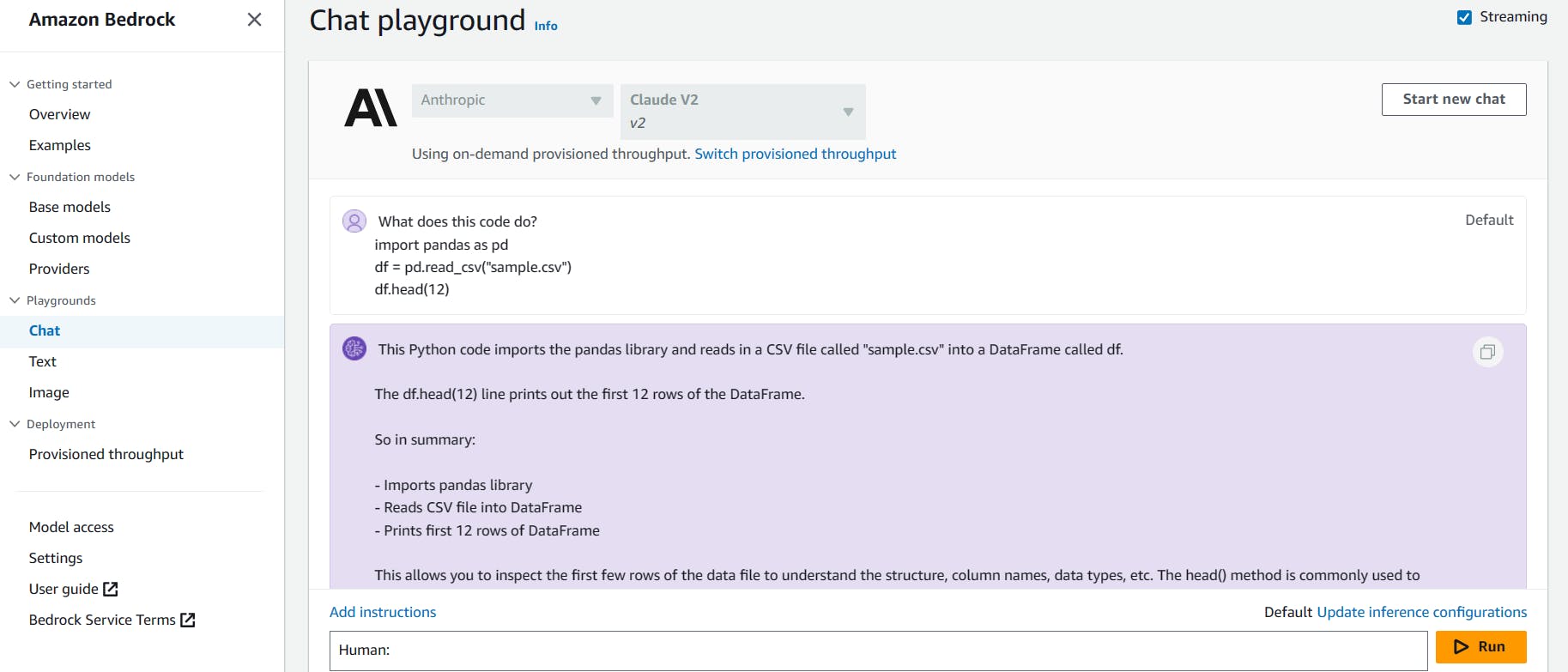

Play and Explore: Not entirely sure of a model’s capability? Fret not! The Amazon Bedrock dashboard features a playground that lets you test and tweak it to understand its full potential.

Embed in Your App: Once you’re satisfied, use an AWS SDK such as boto3 (the AWS SDK for Python) to integrate the model into your application. Need some guidance? The official AWS repository has got you covered with examples for each model: Amazon Bedrock Workshop on GitHub.

Example usage of Amazon Bedrock on its Playground page for text and image generation
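As a rough idea of what the "Embed in Your App" step looks like, here is a minimal sketch that sends one prompt to Claude through boto3. It assumes you have been granted access to the model, that the model ID is `anthropic.claude-v2`, and that the model uses Claude's Human/Assistant text-completion format; `build_claude_body` and `ask_claude` are hypothetical helper names, not part of any AWS library.

```python
import json

MODEL_ID = "anthropic.claude-v2"  # assumed model ID; check the Bedrock console for yours


def build_claude_body(prompt, max_tokens=300):
    """Build the JSON request body in Claude's text-completion format."""
    return json.dumps({
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
        "temperature": 0.5,
    })


def ask_claude(prompt, region_name="us-east-1"):
    """Send one prompt via the Bedrock runtime (requires AWS credentials and model access)."""
    import boto3  # imported lazily so build_claude_body works without boto3

    runtime = boto3.client("bedrock-runtime", region_name=region_name)
    response = runtime.invoke_model(
        modelId=MODEL_ID,
        body=build_claude_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing a JSON document.
    return json.loads(response["body"].read())["completion"]


# Example usage (makes a real, billed AWS call):
# print(ask_claude("Summarize what Amazon Bedrock is in one sentence."))
```

Other providers expect different request bodies, so treat the payload here as specific to Claude rather than to Bedrock in general.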

Why Should You Hop on the Bedrock Train?

Simplicity and integration are at the heart of Amazon Bedrock. It offers a unified API for both text and image generation that dovetails perfectly with the AWS ecosystem. This means no more reliance on third-party APIs that might take you out of the AWS environment. The result? A sturdier, more flexible, and future-proof product.
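To make the "unified API" point concrete: text and image models go through the same `invoke_model` call, and only the model ID and the JSON payload change. The sketch below assumes the model IDs and payload shapes shown (they may differ by model version), and `PAYLOADS`, `build_body`, `invoke`, and `decode_image` are illustrative names rather than AWS APIs.

```python
import base64
import json

# Assumed model IDs and request shapes; verify them against the current docs.
PAYLOADS = {
    "anthropic.claude-v2": lambda prompt: {
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": 300,
    },
    "stability.stable-diffusion-xl-v1": lambda prompt: {
        "text_prompts": [{"text": prompt}],
        "cfg_scale": 10,
        "steps": 50,
    },
}


def build_body(model_id, prompt):
    """Serialize the provider-specific payload for a given model."""
    return json.dumps(PAYLOADS[model_id](prompt))


def invoke(model_id, prompt, region_name="us-east-1"):
    """Same call for every model (requires AWS credentials and model access)."""
    import boto3  # imported lazily so the helpers work without boto3

    runtime = boto3.client("bedrock-runtime", region_name=region_name)
    response = runtime.invoke_model(modelId=model_id, body=build_body(model_id, prompt))
    return json.loads(response["body"].read())


def decode_image(result):
    """Extract raw image bytes from a Stable Diffusion style response."""
    return base64.b64decode(result["artifacts"][0]["base64"])
```

With this shape, switching from text to image generation is a change of key in `PAYLOADS` rather than a new client or a new vendor SDK.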

Which Models Does Bedrock Currently Support?

Titan by Amazon: A versatile tool for tasks like text generation, classification, question answering, information extraction, and personalized text embeddings.

Jurassic by AI21 Labs: Ideal for varied language tasks, including (but not limited to) text generation, summarization, and question answering.

Claude by Anthropic: This model shines in areas such as thoughtful dialogue, complex reasoning, content creation, and even coding! It is trained with Constitutional AI and harmlessness training.

Command by Cohere: Tailored for businesses, this model excels at generating text-based responses based on prompts.

Llama 2 by Meta: Perfectly fine-tuned for dialogue-centric applications.

Stable Diffusion by Stability AI: For the visually inclined, this image generation model crafts stunning visuals, artwork, logos, and designs.

What’s the Cost?

Amazon Bedrock offers two pricing options. Users can opt for either:

- On-Demand: A flexible pay-as-you-go option without the strings of time-based commitments.

The on-demand pricing is roughly the same as what each of these companies (e.g. Anthropic, Stability, …) charges for API access on its own platform.

- Provisioned Throughput: For those seeking assured performance to match their application’s demands in return for a time-based commitment.

This can save you a lot of money if you have a predictable workload.
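Whether Provisioned Throughput actually saves money depends on your volume, so it is worth doing the arithmetic. The sketch below compares the two options, assuming on-demand is billed per 1,000 input and output tokens and provisioned capacity is billed per hour. All prices in the example are hypothetical placeholders, not real Bedrock rates.

```python
def on_demand_cost(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Monthly on-demand cost, billed per 1,000 input and output tokens."""
    return (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k


def provisioned_cost(hours, hourly_price, model_units=1):
    """Cost of reserved capacity, billed per hour regardless of usage."""
    return hours * hourly_price * model_units


def cheaper_option(on_demand, provisioned):
    """Name the cheaper of the two totals."""
    return "on-demand" if on_demand <= provisioned else "provisioned"


# Hypothetical numbers for illustration only.
monthly_on_demand = on_demand_cost(
    input_tokens=1_000_000, output_tokens=500_000,
    price_in_per_1k=0.01, price_out_per_1k=0.03,
)
monthly_provisioned = provisioned_cost(hours=720, hourly_price=40)
print(cheaper_option(monthly_on_demand, monthly_provisioned))
```

Plug in the current prices for your model and your expected token volume to see where the break-even point lies for your workload.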

Conclusion

If you want a seamless, AWS-native way to add Generative AI to your application, Amazon Bedrock is definitely something you need to check out. With its ease of use, diverse model offerings with customization options, and dedicated pricing for provisioned workloads, it’s well worth exploring!