Introduction

Last week, an AI painting created with the Stable Diffusion model drew a great deal of attention on social media for its eye-catching design. MrUgleh, the creator of the image, shared his workflow in a Reddit post so that others can reproduce it. Here, we first discuss this new genre of AI art, then show how you can create such artwork on your own machine.

Controlism, a new AI Art genre!

Artists have long embedded secondary messages or patterns within their paintings. With recent advances in AI, a new genre of AI art built on the same idea is emerging.

“Human Being” painting by M. Farshchian vs. a ControlNet AI artwork that uses the original face as its pattern. Image by DreamingTulpa

Over the past few years, Stable Diffusion models have democratized art creation, enabling even people without an artistic background to produce beautiful paintings simply by describing them to an AI. One intriguing application of these models is QR-code art: images that do not look like a standard QR code but still scan as one.

Sample QR code created using ControlNet

Using the same principle of guiding Stable Diffusion with a pattern (originally the QR-code pattern, later generalized to any pattern), we can create images like these. Credit to MrUgleh for discovering this workflow!

Original AI artworks by MrUgleh

How Stable Diffusion works, simply put!

Stable Diffusion is a type of artificial intelligence (AI) model that generates images from text descriptions. It starts from random noise and removes that noise step by step, guided by the text description, until a detailed image emerges.

The model is trained on a massive dataset of images and text descriptions, which allows it to learn the relationship between words and images. When you give the model a text description, it uses its knowledge to generate an image that matches the description as closely as possible.
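In Stable Diffusion, this text guidance is usually applied through classifier-free guidance, the technique behind the CFG Scale setting in A1111. At each step the noise predictor ε_θ runs twice on the noisy image x_t: once with the prompt embedding c and once with an empty (or negative) prompt ∅. The two predictions are then combined with a guidance scale s. The notation below is the standard one from the literature, not something defined in this article:

```latex
\hat{\epsilon}_\theta(x_t, c) = \epsilon_\theta(x_t, \varnothing) + s \, \bigl( \epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, \varnothing) \bigr)
```

With s = 1 the formula reduces to the plain prompt-conditioned prediction. Larger values push the image to follow the prompt more closely, usually at some cost in variety and naturalness.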

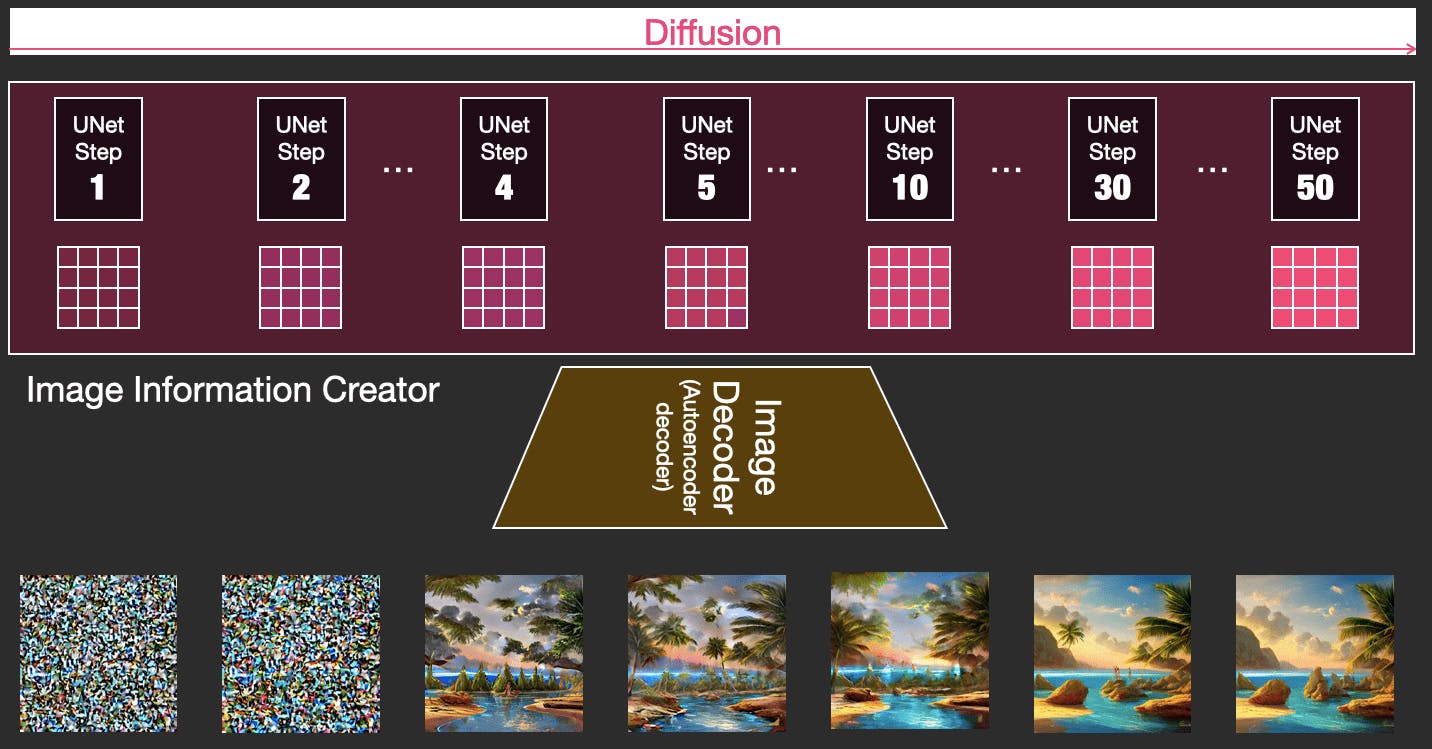

Here is a simplified explanation of how Stable Diffusion works:

The model starts with a random noise image.

It then applies a series of denoising steps. At each step, the model predicts the noise in the current image, using the text description as guidance, and removes part of it, so details gradually appear.

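It repeats this for a fixed number of steps (the sampling steps setting). Strictly speaking, Stable Diffusion does all of this in a compressed latent space, and a decoder (the VAE) turns the final latent into the output image.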
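The steps above can be illustrated with a deliberately simplified toy in Python. This is not Stable Diffusion's sampler. A real model never sees the target image. Instead it predicts the noise with a neural network (a U-Net) conditioned on the prompt, and it works on latents rather than pixels. Here a hypothetical 4-value target vector stands in for "the image the prompt describes", and `toy_denoise` and `distance` are illustrative helpers written only for this example.

```python
import random


def toy_denoise(target, steps=20, seed=0):
    """Toy illustration of iterative denoising (NOT the real Stable Diffusion sampler).

    `target` stands in for the image the prompt describes; a real model never
    sees it and must predict the noise with a neural network instead.
    Returns every intermediate state, starting with pure noise.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # step 0: pure random noise
    history = [x]
    for t in range(steps):
        # Toy "noise prediction": whatever separates the current state from the target.
        predicted_noise = [xi - ti for xi, ti in zip(x, target)]
        # Remove a growing fraction of the predicted noise; the last step removes all of it.
        fraction = 1.0 / (steps - t)
        x = [xi - fraction * ni for xi, ni in zip(x, predicted_noise)]
        history.append(x)
    return history


def distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5


if __name__ == "__main__":
    target = [0.2, -0.5, 0.9, 0.0]  # hypothetical 4-"pixel" image
    for step, state in enumerate(toy_denoise(target, steps=5)):
        print(step, round(distance(state, target), 4))
```

With this particular schedule the state moves in equal steps from the noise toward the target, so each printed distance shrinks by the same amount and the last one is zero (up to rounding). Real samplers such as DDIM or Euler follow carefully designed noise schedules instead.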

Example of Denoising, Image from The Illustrated Stable Diffusion – Jay Alammar

ControlNet is a neural network that adds extra control to Stable Diffusion models: besides the text prompt, it takes a control image (for example, an edge map, a depth map, a pose skeleton, or a pattern) and steers the generation to follow it. It can be used to:

Specify the composition of the image, such as the placement of objects and the background.

Control the style of the image, such as whether it is realistic or cartoonish.

Generate images from reference images.

Generate images with specific attributes, such as a certain color palette or texture.

ControlNet significantly improves Stable Diffusion by giving users much more control over image generation than a text prompt alone. This is how we can use a pattern image to guide the model toward the images we want.
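To try this yourself you need a pattern image for ControlNet to follow. Any high-contrast black-and-white image can serve as a starting point. As one example, the sketch below draws concentric rings and saves them as a grayscale PNG using only Python's standard library, so the file can be uploaded as the control image. `ring_pattern` and `write_grayscale_png` are hypothetical helpers written for this article, not part of A1111 or ControlNet, and the 512x512 size and 32-pixel ring width are arbitrary choices.

```python
import math
import struct
import zlib


def _png_chunk(tag: bytes, data: bytes) -> bytes:
    """Encode one PNG chunk: length, type, data, then CRC32 of type+data."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def ring_pattern(width: int, height: int, ring_width: float = 32.0) -> list[list[int]]:
    """Concentric black/white rings centred on the image (an example pattern)."""
    cx, cy = (width - 1) / 2, (height - 1) / 2
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            r = math.hypot(x - cx, y - cy)
            # Even-numbered rings are white (255), odd-numbered rings are black (0).
            row.append(255 if int(r // ring_width) % 2 == 0 else 0)
        rows.append(row)
    return rows


def write_grayscale_png(path: str, pixels: list[list[int]]) -> None:
    """Write 8-bit grayscale pixels (values 0-255) as a PNG file."""
    height, width = len(pixels), len(pixels[0])
    # Every scanline starts with filter type 0 ("None").
    raw = b"".join(b"\x00" + bytes(row) for row in pixels)
    # Width, height, bit depth 8, colour type 0 (grayscale), default compression/filter/interlace.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    with open(path, "wb") as f:
        f.write(b"\x89PNG\r\n\x1a\n")
        f.write(_png_chunk(b"IHDR", ihdr))
        f.write(_png_chunk(b"IDAT", zlib.compress(raw)))
        f.write(_png_chunk(b"IEND", b""))


if __name__ == "__main__":
    write_grayscale_png("pattern.png", ring_pattern(512, 512))
```

Writing the PNG chunks by hand avoids any third-party dependency; if you already use an imaging library such as Pillow, it can produce the same file more concisely.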

How to Create Controlism AI Art

Depending on your familiarity and expertise, there are several ways to dabble in Controlism AI art:

- Simple Route: HuggingFace Space

Use this HuggingFace space developed by AP. The only pitfall is that it is temporary, so if you are reading this article much later, the space has probably been shut down. You can still duplicate it and run it at your own cost (at the time of writing, HF charged $0.60 to $3.15 per hour for GPU spaces, from small to large GPUs), or, if you have a GPU, clone the repo and run it locally.
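Because duplicated GPU spaces are billed per hour, a quick estimate helps before you duplicate one. A minimal sketch, assuming the $0.60 and $3.15 hourly rates quoted above (they may have changed since this was written) and ignoring any other charges; `space_cost_range` is a hypothetical helper:

```python
def space_cost_range(hours: float, low_rate: float = 0.60, high_rate: float = 3.15) -> tuple[float, float]:
    """Rough cost range (USD) for running a duplicated GPU space for `hours` hours.

    The default rates are the per-hour range quoted in this article; check
    HuggingFace's current pricing before relying on them.
    """
    if hours < 0:
        raise ValueError("hours must be non-negative")
    # Smallest GPU rate gives the lower bound, largest GPU rate the upper bound.
    return round(hours * low_rate, 2), round(hours * high_rate, 2)


if __name__ == "__main__":
    low, high = space_cost_range(10)
    print(f"10 hours: ${low:.2f} to ${high:.2f}")  # 10 hours: $6.00 to $31.50
```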

- Robust Approach: Stable Diffusion WebUI

Running it requires a GPU; if you don’t have one, you can use an online service such as RunDiffusion.

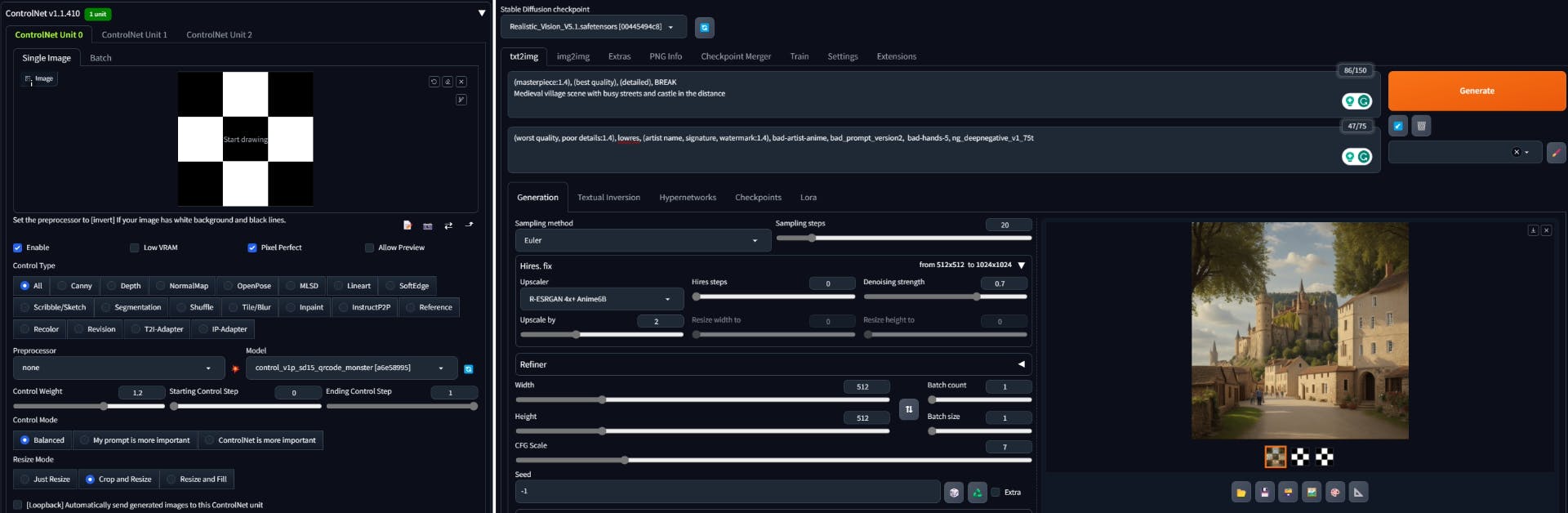

The main way to create such artworks with Stable Diffusion is the Stable Diffusion WebUI tool (also known as A1111). To create Controlism AI art, we then add the ControlNet extension to it. Follow these steps to set up A1111:

- Install stable-diffusion-webui

You need Python 3.10 installed (not a newer or older version).
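Because the setup requires Python 3.10 specifically, it is worth checking which interpreter you will launch the WebUI with before installing anything. A minimal sketch; `is_supported_python` is a hypothetical helper, and it only reports on the interpreter that runs it, so run it with the same `python` command the WebUI will use:

```python
import sys


def is_supported_python(version_info=None) -> bool:
    """Return True if the given (or current) interpreter version is Python 3.10.x."""
    if version_info is None:
        version_info = sys.version_info
    # Compare only major and minor; any 3.10 patch release passes.
    return tuple(version_info[:2]) == (3, 10)


if __name__ == "__main__":
    v = sys.version_info
    status = "OK" if is_supported_python() else "not 3.10, install Python 3.10 first"
    print(f"Python {v.major}.{v.minor}.{v.micro}: {status}")
```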

- Install the ControlNet extension

Follow the instructions in the Mikubill/sd-webui-controlnet repository on GitHub (WebUI extension for ControlNet). In short, open the Extensions tab in A1111, go to Install from URL, paste https://github.com/Mikubill/sd-webui-controlnet, and click Install.
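If you prefer the command line, A1111 also loads any extension cloned into its `extensions` folder, so the same extension can be installed with git and picked up the next time you restart the WebUI. A minimal sketch, assuming git is installed and that `stable-diffusion-webui` is your A1111 folder; `controlnet_clone_command` is a hypothetical helper that only builds the command:

```python
import shlex
from pathlib import Path

CONTROLNET_REPO = "https://github.com/Mikubill/sd-webui-controlnet"


def controlnet_clone_command(webui_root: str) -> list[str]:
    """Build the git command that clones the ControlNet extension into A1111's extensions folder."""
    target = Path(webui_root) / "extensions" / "sd-webui-controlnet"
    return ["git", "clone", CONTROLNET_REPO, str(target)]


if __name__ == "__main__":
    # Print the command rather than running it; pass the list to
    # subprocess.run(cmd, check=True) if you want Python to execute it.
    print(shlex.join(controlnet_clone_command("stable-diffusion-webui")))
```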

- Download the required models

You need to download a base Stable Diffusion model and a ControlNet model. The best ones I’ve found so far are:

Stable Diffusion model (put this in the models/Stable-diffusion folder): Realistic_Vision_V5.1_noVAE

ControlNet model (put this in the models/ControlNet folder): control_v1p_sd15_qrcode_monster

Note that these are large files (around 5 GB in total).
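Before launching the WebUI, a short script can confirm that both downloads ended up where A1111 looks for them: A1111's checkpoint folder is named `models/Stable-diffusion`, and the ControlNet extension reads models from `models/ControlNet`. A minimal sketch; `missing_models` is a hypothetical helper, and it matches by file-name prefix because the exact file names and extensions you download may differ:

```python
from pathlib import Path

# Folder (relative to the A1111 root) -> model name prefix expected inside it.
# The names come from this guide; your downloaded file names may have extra suffixes.
EXPECTED_MODELS = {
    "models/Stable-diffusion": "Realistic_Vision_V5.1",
    "models/ControlNet": "control_v1p_sd15_qrcode_monster",
}

MODEL_SUFFIXES = {".safetensors", ".ckpt", ".pth"}


def missing_models(webui_root: str) -> list[str]:
    """Return the expected models that are not yet present in their folders."""
    missing = []
    for folder, prefix in EXPECTED_MODELS.items():
        directory = Path(webui_root) / folder
        found = directory.is_dir() and any(
            f.name.startswith(prefix) and f.suffix in MODEL_SUFFIXES
            for f in directory.iterdir()
        )
        if not found:
            missing.append(f"{folder}/{prefix}*")
    return missing


if __name__ == "__main__":
    problems = missing_models("stable-diffusion-webui")
    print("All models in place" if not problems else "Missing: " + ", ".join(problems))
```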

Finally, generate the images with the right parameters, which play an important role here. The best settings I found for images like the ones MrUgleh created are Control Strength (Control Weight in the ControlNet panel): 1.2 and Pixel Perfect: enabled. In the end, your A1111 page should look like this:

- The Proprietary Method: ArtBreeder

The ArtBreeder platform lets you generate ControlNet images from your own patterns and prompts. It is available at ArtBreeder.com.

Conclusion

Generative AI is advancing at a staggering pace, making it challenging to keep up with its latest innovations. As its quality improves and its capabilities diversify, it paves the way for applications in a broader range of fields, potentially expanding its market reach. In this article, we’ve explored one such innovation and provided insights on how you can experiment with it and potentially integrate it into your products.